Exploiting cyclic symmetry in convolutional neural networks

08 Feb 2016

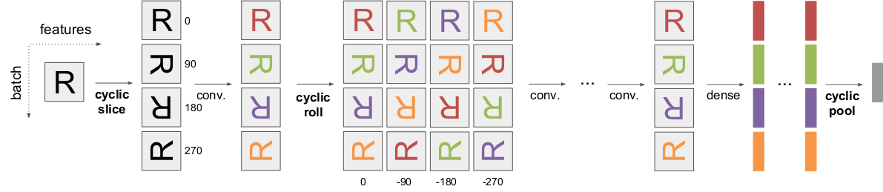

Schematic representation of the effect of our proposed operations, cyclic slice, roll and pool, on the feature maps in a convnet.

We propose some simple, plug-and-play operations for convolutional neural networks that allow them to be partially equivariant or invariant to rotations.

Sander Dieleman, Jeffrey De Fauw, Koray Kavukcuoglu

Accepted for publication at ICML 2016.

Abstract

Many classes of images exhibit rotational symmetry. Convolutional neural networks are sometimes trained using data augmentation to exploit this, but they are still required to learn the rotation equivariance properties from the data. Encoding these properties into the network architecture, as we are already used to doing for translation equivariance by using convolutional layers, could result in a more efficient use of the parameter budget by relieving the model from learning them. We introduce four operations which can be inserted into neural network models as layers, and which can be combined to make these models partially equivariant to rotations. They also enable parameter sharing across different orientations. We evaluate the effect of these architectural modifications on three datasets which exhibit rotational symmetry and demonstrate improved performance with smaller models.
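To make the idea of inserting these operations as layers more concrete, here is a minimal NumPy sketch of two of them, cyclic slice and cyclic pool. It is an illustration under stated assumptions, not the authors' implementation: the function names `cyclic_slice` and `cyclic_pool`, the `(batch, height, width)` layout, the use of mean pooling, and the toy feature extractor `toy_features` are all hypothetical choices made for this example. Slicing stacks four rotated copies of each input along the batch axis; pooling then combines the features of those four copies with a permutation-invariant function, so the pooled output does not change when the input is rotated by a multiple of 90 degrees.

```python
import numpy as np


def cyclic_slice(x):
    """Stack the 0, 90, 180 and 270 degree rotations of a batch of square images.

    x has shape (batch, height, width); the result has shape
    (4 * batch, height, width), ordered by rotation first.
    """
    return np.concatenate(
        [np.rot90(x, k=k, axes=(1, 2)) for k in range(4)], axis=0
    )


def cyclic_pool(features, reduce=np.mean):
    """Pool features across the four rotated copies made by cyclic_slice.

    features has shape (4 * batch, n_features); the result has shape
    (batch, n_features). Mean pooling is an assumption of this sketch; any
    permutation-invariant reduction would give the same invariance.
    """
    batch = features.shape[0] // 4
    return reduce(features.reshape(4, batch, -1), axis=0)


# Hypothetical stand-in for the layers between slicing and pooling:
# a single dense layer with a tanh nonlinearity on the flattened image.
rng = np.random.default_rng(0)
weights = rng.normal(size=(25, 3))


def toy_features(images):
    return np.tanh(images.reshape(len(images), -1) @ weights)


x = rng.normal(size=(2, 5, 5))            # two 5x5 "images"
x_rotated = np.rot90(x, k=1, axes=(1, 2))  # the same images, rotated 90 degrees

out = cyclic_pool(toy_features(cyclic_slice(x)))
out_rotated = cyclic_pool(toy_features(cyclic_slice(x_rotated)))

# Rotating the input only permutes the four copies, so the pooled
# features agree up to floating-point rounding.
print(np.allclose(out, out_rotated))
```

Rotating the input cyclically shifts which copy lands in which slot, and the pooling step discards that ordering. The layers between slice and pool are shared across all four orientations, which is the parameter sharing the abstract refers to.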